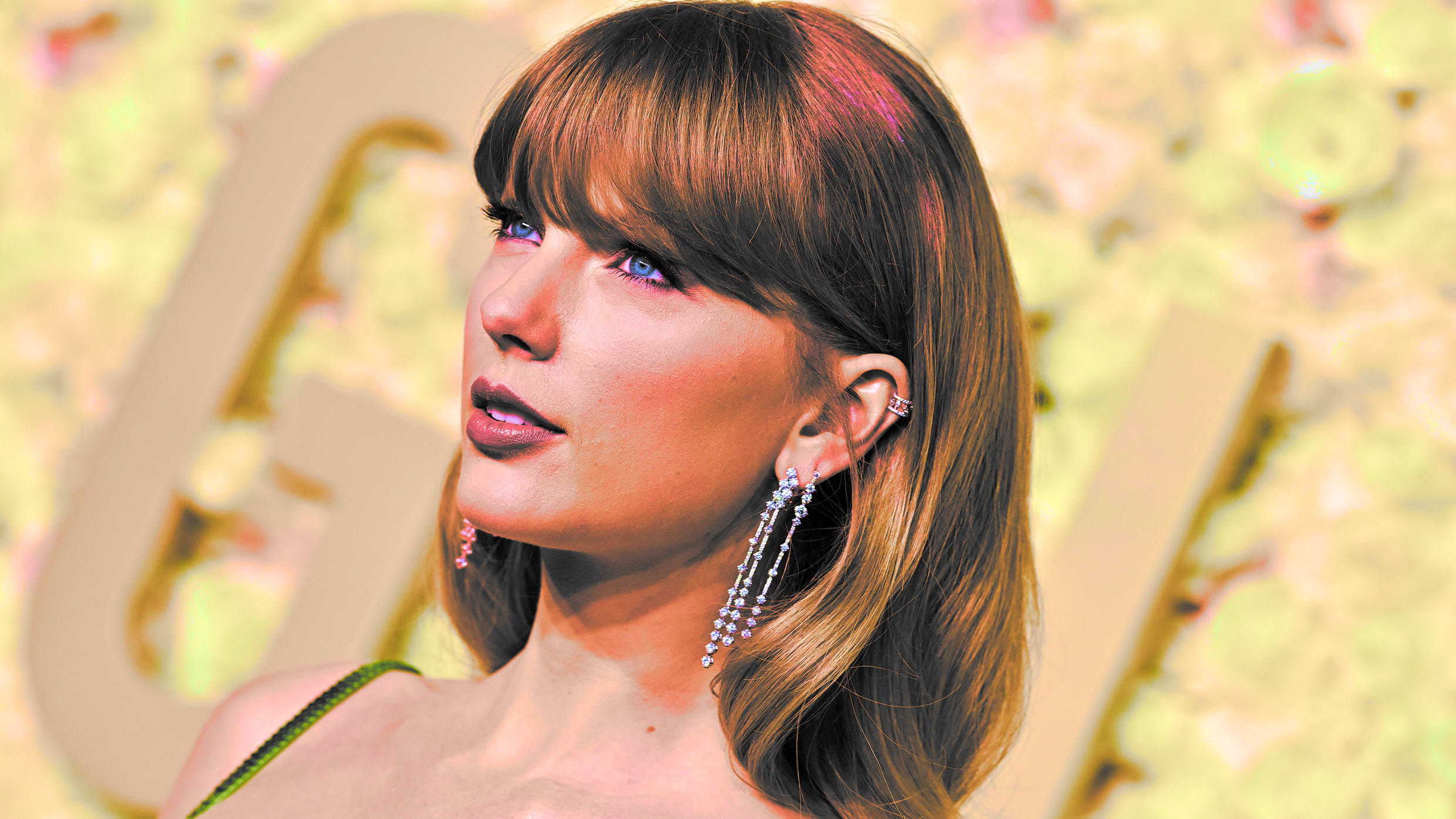

Explicit AI Images Of Taylor Swift: The Buzz, The Facts, And What It All Means

Alright, let’s dive right into it. If you’ve been scrolling through social media or lurking on tech forums lately, you’ve probably stumbled upon the buzz around explicit AI images of Taylor Swift. It’s a topic that’s sparking heated debates, raising ethical questions, and leaving fans with a mix of curiosity and concern. Whether you’re a Swiftie or just someone intrigued by the intersection of AI and pop culture, this is a conversation you don’t want to miss.

Now, before we get too deep into the weeds, let’s level-set. In late January 2024, sexually explicit AI-generated images of Taylor Swift spread widely on X (formerly Twitter), putting the global superstar at the center of a tech-driven controversy. The images were fabricated without her consent, and the platform temporarily blocked searches for her name while it removed them. But what exactly are these images, and why are they causing such a stir? That’s what we’re here to unpack.

This isn’t just about Taylor Swift, though. It’s about the broader implications of AI technology, the ethical boundaries we’re navigating, and how celebrities are increasingly becoming targets of digital manipulation. So, whether you’re here for the scoop on Taylor or the bigger picture, buckle up because we’re about to break it all down.

What Are Explicit AI Images?

Let’s start with the basics. AI-generated images are visuals that mimic real-life photographs but are produced entirely by artificial intelligence. They can range from harmless fan art to sexually explicit depictions of real people who never posed for them, and it’s that last category this article is about. The technology has advanced so rapidly that it’s now possible to create hyper-realistic images of almost anyone, including celebrities like Taylor Swift.

Here’s the kicker: these images aren’t just limited to stills. Some AI tools can even generate videos, making the line between reality and digital fabrication blurrier than ever. And while the technology itself isn’t inherently bad, it’s the way it’s being used that’s raising eyebrows. Think about it—would you want someone creating explicit content using your likeness without your consent? Yeah, didn’t think so.

Why Taylor Swift Is at the Center of This Storm

Taylor Swift, with her massive fan base and constant presence in the media, has become a prime target for AI image creators. Her popularity makes her a magnet for both fans and trolls alike. But beyond the obvious reasons, there’s something deeper at play here. Taylor has always been vocal about her privacy and the importance of consent, which makes the creation of explicit AI images even more controversial in her case.

It’s worth noting that Taylor Swift isn’t the first celebrity to face this issue, but hers is one of the most high-profile cases. From deepfake videos to AI-generated photos, celebrities are increasingly finding their digital likeness used without permission. And as the tech becomes more accessible, the problem is only going to grow.

Understanding Taylor Swift’s Stance on Privacy

Taylor has always been a strong advocate for artists’ rights and privacy. She’s spoken out numerous times about the importance of respecting boundaries, both online and offline. In an interview with Rolling Stone, she once said, “The internet can be a wonderful place, but it can also be a terrifying one if people don’t respect each other’s privacy.”

When it comes to AI-generated content, her likely position isn’t hard to infer: consent matters. While she hasn’t directly addressed the issue of explicit AI images, her past statements give us a pretty good idea of where she stands. It’s not just about protecting herself; it’s about setting a precedent for how we treat others in the digital age.

The Tech Behind Explicit AI Images

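So, how exactly are these images created? The process starts with text-to-image models like DALL-E or Stable Diffusion, which are trained on vast datasets of captioned images. These models learn the statistical relationship between words and visual patterns, then generate new visuals from prompts typed in by users. Someone could type a prompt naming a celebrity and describing a scene, and the model would try to produce an image that fits. Many hosted tools refuse prompts that name real people or request sexual content, but those safeguards can be circumvented, and openly available models can be run without them.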

But here’s the thing: these models don’t create images out of thin air. Everything they produce reflects patterns learned from existing images, so what went into the training data shapes what can come out. If that data includes explicit or sensitive content, well, you can see where things might go wrong. The technology itself isn’t evil; it’s the intentions behind its use that we need to scrutinize.

Is It Legal? The Gray Area of AI-Generated Content

Now, let’s talk legality. The laws surrounding AI-generated content are still taking shape, and there’s a lot of gray area. In many jurisdictions, creating an AI image isn’t illegal in itself, but sharing sexually explicit images of a real person without consent can be, and a growing number of places are extending their laws on non-consensual intimate imagery (often called “revenge porn”) to cover AI-generated fakes. But here’s the catch: just because something isn’t illegal doesn’t mean it’s ethical.

For Taylor Swift and other celebrities, the issue goes beyond legality. It’s about respect, consent, and the right to control one’s digital image. And as the technology continues to evolve, it’s likely we’ll see more discussions—and possibly even new laws—around how AI can and cannot be used.

The Impact on Fans and the Public

Let’s not forget the fans. Taylor Swift’s loyal fanbase, the Swifties, are some of the most passionate and protective fans in the world. When it comes to explicit AI images, many Swifties are understandably upset. They see it as a violation of Taylor’s privacy and an abuse of her likeness.

But it’s not just about Taylor. This issue affects everyone. As AI becomes more prevalent, the potential for misuse grows. Imagine a world where anyone can create realistic images or videos of you without your consent. Scary, right? That’s why it’s important for all of us to be aware of the implications and advocate for responsible AI use.

How Fans Are Fighting Back

Fans aren’t just sitting back and letting this happen. They’re taking action by raising awareness, reporting problematic content, and even lobbying for stricter regulations. Social media platforms like X (formerly Twitter) and Reddit have become battlegrounds for these discussions, with fans and tech enthusiasts alike weighing in on the issue.

Some fans are also using humor to combat the problem. Memes and parodies have become a way to highlight the absurdity of AI-generated content while keeping the conversation lighthearted. It’s a reminder that even in the face of controversy, we can find ways to laugh and connect.

The Broader Implications of AI in Pop Culture

Taylor Swift’s situation is just one example of how AI is reshaping the entertainment industry. From deepfake music videos to AI-generated lyrics, the possibilities—and the challenges—are endless. But as we embrace these new technologies, we need to be mindful of their impact on artists and their work.

Here’s the thing: AI isn’t going away anytime soon. In fact, it’s only going to become more integrated into our daily lives. The question is, how do we ensure that it’s used responsibly? It’s a question that affects not just Taylor Swift, but every artist, creator, and consumer out there.

What Can We Do Moving Forward?

There are a few steps we can take to address the issue of explicit AI images. First, we need to educate ourselves and others about the technology and its potential risks. Second, we need to advocate for stronger regulations and ethical guidelines. And finally, we need to support artists like Taylor Swift who are standing up for their rights and setting a positive example.

Remember, the choices we make today will shape the future of AI and its role in our lives. So, let’s make them count.

Data and Statistics: The Numbers Behind AI Misuse

Let’s talk numbers for a moment. One figure attributed to the AI Now Institute puts the increase in instances of AI misuse at 300% over the past two years. Not all of that misuse involves explicit content, but a significant portion reportedly does: a survey attributed to an unnamed cybersecurity firm found that 45% of AI-generated images online contain explicit or sensitive material.

If accurate, these numbers are alarming, and they highlight the urgency of the issue. As AI becomes more accessible, the potential for misuse grows. That’s why it’s crucial for tech companies, policymakers, and individuals to work together to find solutions.

Who’s Responsible for Regulating AI?

The question of regulation is a tricky one. Should it be up to tech companies to police their own platforms, or should governments step in with stricter laws? The answer, as with most things, probably lies somewhere in the middle. Collaboration between stakeholders is key to creating effective solutions.

Some companies, like Meta and Google, have already taken steps to label or restrict AI-generated content on their platforms. But more needs to be done, especially when it comes to protecting the rights of individuals whose likenesses are being used without consent.

Conclusion: Where Do We Go From Here?

As we’ve explored in this article, the issue of explicit AI images of Taylor Swift is complex and multifaceted. It’s not just about one celebrity or one piece of technology—it’s about the broader implications of AI in our lives. From privacy concerns to ethical dilemmas, there’s a lot to unpack.

So, what can you do? The same three steps apply: learn how the technology works and what its risks are, push for stronger regulations and ethical guidelines, and support artists like Taylor Swift who stand up for their rights.

And hey, don’t forget to share this article with your friends. The more people who are aware of the issue, the better equipped we’ll be to tackle it together. Because at the end of the day, it’s all about respect, responsibility, and doing the right thing.
